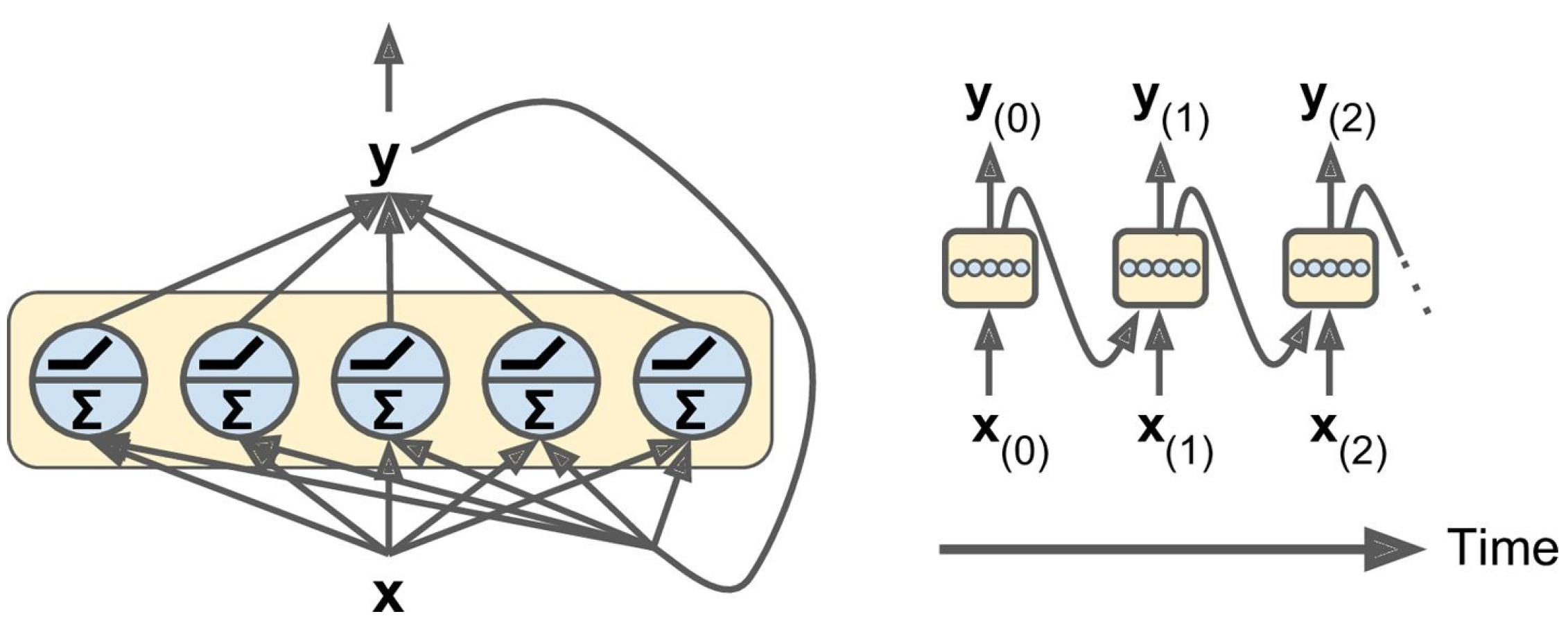

A layer of recurrent neurons (left), unrolled through time (right)

You can easily create a layer of recurrent neurons. At each time step \( t \), every neuron receives both the input vector \( \mathbf{x}_{(t)} \) and the output vector from the previous time step, \( \mathbf{y}_{(t-1)} \), as shown in the figure above.
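To make the unrolled picture concrete, here is a minimal pure-Python sketch of one recurrent layer's forward pass. It assumes a tanh activation, a zero initial output \( \mathbf{y}_{(-1)} \), and hypothetical weight names `Wx` (applied to \( \mathbf{x}_{(t)} \)), `Wy` (applied to \( \mathbf{y}_{(t-1)} \)) and bias `b`; none of these choices come from the text above, and a real framework would vectorize the loops.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def rnn_layer_forward(xs: List[Vector], Wx: Matrix, Wy: Matrix, b: Vector) -> List[Vector]:
    """Run a layer of recurrent neurons over a sequence of input vectors.

    xs: sequence of input vectors x(t), each of length n_inputs
    Wx: n_inputs x n_neurons weights applied to x(t)   (hypothetical name)
    Wy: n_neurons x n_neurons weights applied to y(t-1) (hypothetical name)
    b:  bias vector of length n_neurons
    Returns the list of output vectors y(t), one per time step.
    """
    n_neurons = len(b)
    y_prev = [0.0] * n_neurons  # assumption: y(-1) is all zeros
    outputs = []
    for x in xs:  # one iteration per time step t
        y = []
        for j in range(n_neurons):
            # contribution of the current input vector x(t)
            z = sum(x[i] * Wx[i][j] for i in range(len(x)))
            # contribution of the previous time step's outputs y(t-1)
            z += sum(y_prev[k] * Wy[k][j] for k in range(n_neurons))
            z += b[j]
            y.append(math.tanh(z))  # assumption: tanh activation
        outputs.append(y)
        y_prev = y  # this step's output feeds the next step
    return outputs
```

Every neuron in the layer sees the whole input vector and the whole previous output vector, which is why `Wy` is a square `n_neurons x n_neurons` matrix.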